How to Build Production-Ready Agentic RAG Systems That Actually Work

Seven critical decisions made during implementation determine whether a RAG system succeeds or collapses under real-world usage. Read the article to discover what these decisions are and how to get each one right.

Companies that put RAG into production keep running into the same problem: systems that work perfectly during testing begin to fail under real usage. A study of three real-world RAG implementations revealed that success or failure of a system depends on seven critical architectural decisions made during development.

This pattern repeats across production RAG deployments. Academic research analyzing three real-world RAG systems identified seven distinct failure points that cause production issues. The issue isn't the technology. It's the architecture.

Most tutorials overlook these seven decisions or suggest simplified methods that work for demos but can't scale. Softcery's guide connects each failure mode to a specific architectural decision and offers a framework for testing RAG quality before users experience issues.

Why RAG Prototypes Fail in Production

Prototypes get built for best-case scenarios. Production exposes diverse queries, messy documents, and scale issues. The common naive stack (fixed chunking, default embeddings, and top-5 vector search) works until real users arrive.

A peer-reviewed study analyzing production RAG systems reached a crucial conclusion: "Validation of a RAG system is only feasible during operation." The robustness of these systems evolves over time rather than being designed in at the start.

We’ll walk you through these seven critical failure points:

- Missing Content: The question cannot be answered from available documents, so the system hallucinates instead of admitting ignorance.

- Missed Top-Ranked Documents: The answer exists but ranks 6-10 in relevance. Only the top-5 get returned.

- Not in Context: Retrieved documents don't make it into the generation context window due to size limits.

- Not Extracted: The answer sits in the context, but the LLM fails to extract it correctly because of too much noise.

- Wrong Format: The LLM ignores output format instructions, returning plain text when a table was requested.

- Incorrect Specificity: The answer is too general or too specific for what the user actually needs.

- Incomplete Answers: The response misses available information that should have been included.

Each failure point maps to a specific architectural decision. Problems that weren't designed out from the start can't be fixed easily in production. A systematic approach to these decisions matters more than following tutorials.

The 7 Critical Decisions That Determine Success

All seven decisions are interconnected.

Decision 1: Chunking Strategy

The Naive Approach: Fixed 500-token chunks with 50-token overlap. Works fine in demos with clean markdown documents.

Production Reality: Tables split mid-row, breaking semantic meaning. Code blocks fragment across chunks. Hierarchical documents lose their structure. Mixed content in PDFs causes inconsistent chunking.

The Decision Framework:

Adapt your chunking strategy to the type of document you’re working with. If you treat all document types the same (for example, using fixed 500-token chunks for everything), you lose meaning and context.

If you just convert a table into plain text (e.g., a CSV), the relationships between cells, like which value belongs to which column, simply get lost. Instead, use an LLM-based transformation that preserves the table’s row-column structure and logical connections.

Fixed chunking may split functions or classes in half, which makes the code meaningless for the model. For code, use semantic chunking: chunking based on logical boundaries (like full functions or methods).

PDFs can have multiple columns, tables, images, and other mixed content that simple text extractors can’t handle correctly. Use specialized tools such as:

- LlamaParse – handles complex layouts (multi-column, tables, charts, etc.)

- PyMuPDF – works for simpler PDFs.

Advanced: Semantic and Clustering Chunking

Fixed-size chunking splits text arbitrarily at character or token limits, breaking semantic units mid-sentence or mid-concept. A 500-token chunk might include half of a product description and half of an unrelated section, forcing embeddings to represent multiple disconnected ideas.

Semantic chunking uses embedding similarity to detect topic boundaries. The process: embed each sentence, measure similarity between consecutive sentences, split when similarity drops below threshold (typically 0.5-0.7). This creates variable-size chunks that preserve complete semantic units.
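The process described above can be sketched in a few lines. This is a minimal illustration, not a production implementation: `embed` is a toy bag-of-words stand-in for a real embedding model (an assumption made so the example runs without dependencies), and the function names are hypothetical. The 0.5-0.7 threshold range applies to real embeddings and would need tuning for any actual model.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_chunks(sentences: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Start a new chunk whenever consecutive sentences fall below the threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    prev = embed(sentences[0])
    for sentence in sentences[1:]:
        cur = embed(sentence)
        if cosine(prev, cur) < threshold:
            chunks.append([sentence])    # similarity dropped: topic boundary
        else:
            chunks[-1].append(sentence)  # same topic: extend the current chunk
        prev = cur
    return chunks


sentences = [
    "The refund policy covers all hardware purchases.",
    "The refund policy excludes software purchases.",
    "Shipping takes five business days.",
]
for chunk in semantic_chunks(sentences, threshold=0.5):
    print(chunk)  # the two refund sentences form one chunk, the shipping sentence another
```

Swapping `embed` for a real model changes only that one function; the boundary logic stays the same.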

Clustering chunking extends this by grouping semantically similar sentences across the entire document, even when they're not adjacent. Related information gets consolidated into coherent chunks regardless of original document structure.

Performance benefits: preserves complete thoughts and concepts, reduces context fragmentation, improves retrieval precision. Trade-offs: 10-100x slower processing, variable chunk sizes complicate context window management, threshold tuning required per document type.

Decision 2: Embedding Model Selection

The Naive Approach: Default OpenAI Embeddings (text-embedding-ada-002). Works for general business documents.

Production Reality: Legal terminology gets treated similarly: "revenue recognition" and "revenue growth" embed nearly identically despite having completely different meanings. Medical jargon, technical specifications, and domain-specific terms lose the semantic nuances that matter.

The Decision Framework:

Generic models like OpenAI's text-embedding-3-small cost $0.02 per million tokens. BAAI/bge-base-en-v1.5 is free with 768 dimensions. These work well for general content and initial prototypes.

Fine-tuned models show a 7% retrieval accuracy improvement with just 6,300 training samples. A 128-dimension fine-tuned model can outperform a 768-dimension baseline by 6.51% while being six times smaller. The cost: one-time embedding generation plus storage that scales with corpus size.

Selection Resources: The MTEB benchmark covers 56 embedding tasks. NVIDIA's NV-Embed leads at 69.32. The BEIR benchmark tests across 18 datasets and 9 task types. But don't rely on global averages; match the benchmark to the actual use case.

When to Fine-Tune: High domain terminology density in legal, medical, or technical content justifies fine-tuning. Large query-document semantic gaps benefit from it. But validate that retrieval is the bottleneck first, not chunking or generation.

Decision 3: Retrieval Approach (Hybrid Search)

The Naive Approach: Pure vector similarity search, returning the top-5 chunks. Works with narrow test queries.

Production Reality: Exact keyword matches get missed: product IDs, proper names, numbers. Diverse user query patterns surface edge cases. Relevant content ranks 6-10 because vector similarity is imperfect.

Why Vector Search Alone Isn't Enough: A single vector condenses complex semantics, losing context. Vector search struggles with domain-specific terminology, and similar terms within a specific domain can't be distinguished reliably.

The Hybrid Search Solution:

BM25 (keyword search) excels at exact matches for names, IDs, and numbers. Term frequency ranking is fast and well-understood.

Vector search captures semantic meaning and context understanding. It handles query reformulation naturally.

Implementation means running both searches in parallel, then combining results with Reciprocal Rank Fusion. Benchmark testing consistently shows that hybrid queries produce the most relevant results.

Configuration Guidance: Retrieve the top-20 from each method. Rerank the combined results. Adjust fusion weights based on evaluation metrics.
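A minimal sketch of Reciprocal Rank Fusion, assuming each search method returns an ordered list of document IDs. The function name and the example IDs are hypothetical; `k=60` is the constant commonly used for RRF, and the optional per-method `weights` are an extension added here to illustrate the "adjust fusion weights" step.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, weights=None, k=60):
    """Fuse ranked lists of doc IDs: score(d) = sum_i w_i / (k + rank_i(d)), ranks from 1."""
    weights = weights or [1.0] * len(rankings)
    scores = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += weight / (k + rank)
    # Highest fused score first; ties broken by doc ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


bm25_hits = ["sku-4417", "doc-a", "doc-b"]    # keyword search: exact ID match ranks first
vector_hits = ["doc-a", "doc-c", "sku-4417"]  # vector search: semantic match ranks first
print(reciprocal_rank_fusion([bm25_hits, vector_hits]))
# ['doc-a', 'sku-4417', 'doc-c', 'doc-b']
```

Because RRF works on ranks rather than raw scores, BM25 scores and cosine similarities never need to be normalized against each other.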

Hybrid search isn't optional for production systems. It's the baseline that prevents the "Missed Top-Ranked Documents" failure point.

Advanced: Query Transformation Techniques

Beyond improving retrieval methods, transforming the query itself can significantly boost results:

HyDE (Hypothetical Document Embeddings): Generate a hypothetical answer to the query, embed the hypothetical answer instead of the query, then retrieve documents matching the answer embedding. Document-to-document matching in vector search works better than query-to-document matching for many use cases.

Query Expansion: Rewrite the query in multiple ways, decompose complex queries into sub-queries, generate variations with different terminology, then merge results from all variations. Particularly effective when users phrase questions inconsistently.

Query Routing: Classify query intent, route to the appropriate index or database, enable conditional retrieval only when needed, and coordinate multiple sources. Essential for systems with heterogeneous data sources.

When to Use: Ambiguous queries are common in the domain, multiple knowledge sources exist, complex multi-intent queries occur frequently. Implement after fixing basic retrieval quality first.
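To make one of these techniques concrete, here is a minimal HyDE sketch. The LLM, the embedding model, and the vector index are passed in as plain callables (`generate`, `embed`, `search`), all of which are hypothetical placeholders rather than any particular library's API; the prompt wording is also an assumption.

```python
from typing import Callable, Sequence


def hyde_retrieve(
    query: str,
    generate: Callable[[str], str],
    embed: Callable[[str], Sequence[float]],
    search: Callable[[Sequence[float], int], list[str]],
    top_k: int = 20,
) -> list[str]:
    """Embed a hypothetical answer instead of the raw query, then search with it."""
    # Step 1: ask the LLM for a plausible (possibly wrong) answer passage.
    hypothetical = generate(f"Write a short passage that answers: {query}")
    # Step 2: embed the passage, not the query, so matching is document-to-document.
    vector = embed(hypothetical)
    # Step 3: retrieve real documents that sit close to the hypothetical one.
    return search(vector, top_k)
```

The hypothetical answer is only used for retrieval and is never shown to the user, so factual errors in it matter less than its vocabulary and shape.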

Decision 4: Reranking Strategy

The Naive Approach: No reranking; trust the initial retrieval order. Saves latency and cost.

Production Reality: Vector similarity doesn't equal actual relevance for user queries. First-pass retrieval optimizes for recall, not precision. High-stakes applications can't tolerate relevance errors.

Cross-Encoder Reranking:

Stage one: a fast retriever narrows candidates to the top-20. Stage two: a cross-encoder reranks by processing query and document together. The attention mechanism across both inputs produces higher accuracy.
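The second stage can be sketched as follows, assuming stage one has already produced a candidate list. `rerank` and `overlap_score` are hypothetical names; `overlap_score` is a toy word-overlap scorer used only so the example runs, and in practice `score_pair` would wrap a real cross-encoder or a hosted rerank API.

```python
from typing import Callable


def rerank(
    query: str,
    candidates: list[str],
    score_pair: Callable[[str, str], float],
    top_n: int = 5,
) -> list[str]:
    """Score each (query, document) pair jointly and keep the top_n best documents."""
    scored = [(score_pair(query, doc), doc) for doc in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep retrieval order
    return [doc for _, doc in scored[:top_n]]


def overlap_score(query: str, doc: str) -> float:
    """Toy scorer (fraction of query words found in the document), not a cross-encoder."""
    q = set(query.lower().split())
    return len(q & set(doc.lower().split())) / len(q) if q else 0.0


candidates = ["shipping policy overview", "hardware refund policy details", "company history"]
print(rerank("refund policy for hardware", candidates, overlap_score, top_n=2))
# ['hardware refund policy details', 'shipping policy overview']
```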

Performance data from the BEIR benchmark: Contextual AI's reranker scored 61.2 versus Voyage-v2's 58.3 (a 2.9-point improvement). Other strong options include Cohere's rerank models and BAAI/bge-reranker-v2-m3. Anthropic's contextual retrieval combined with reranking achieved a 67% failure rate reduction.

Trade-offs: Latency increases by a few hundred milliseconds if unoptimized. Cost becomes significant for high-throughput systems (thousands of queries per second multiplied by 100 documents). The accuracy improvement is worth it for precision-critical applications.

When to Use Reranking: Legal search and B2B databases where stakes are high. Applications requiring fine-grained relevance. Only after fixing initial retrieval quality: don't use reranking to compensate for poor initial retrieval.

When to Skip: Low-stakes applications like internal search or FAQs. Tight latency budgets under 200ms. Initial retrieval already achieving precision above 80%.

Decision 5: Context Management

The Naive Approach: Dump all retrieved chunks into the prompt. More context equals better answers, right?

Production Reality: The context window fills with redundant information. Conflicting information from multiple chunks confuses the LLM. Token costs scale with context size, and output tokens run 3-5x more expensive than input tokens. Retrieved doesn't equal utilized; this is Failure Point #3 from the research.

Context Window Constraints: GPT-4o has 128,000 tokens total. About 750 words equals 1,000 tokens. The budget must cover the system prompt, retrieved chunks, user query, and response.

Chunk Count Strategy: Too few chunks (top-3) means missing information and incomplete answers. Too many (top-20) creates noise, cost, and confusion. The sweet spot: 5-10 for most applications, determined empirically through testing.

Context Optimization Techniques:

Deduplication removes semantically similar chunks. Prioritization ranks by relevance score and fills context in order. Summarization condenses lower-ranked chunks. Hierarchical retrieval uses small chunks for search but expands to parent chunks for context.
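The deduplication and prioritization steps can be sketched together as a context-packing function. This is an illustration under simplifying assumptions: `jaccard` (word-set overlap) stands in for embedding-based similarity, token cost is approximated as one token per whitespace-separated word, and the `dup_threshold` of 0.8 is an arbitrary example value.

```python
def jaccard(a: str, b: str) -> float:
    """Word-set overlap; a cheap stand-in for embedding similarity."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def pack_context(scored_chunks, token_budget, dup_threshold=0.8):
    """Fill the context in relevance order, skipping near-duplicates and oversize chunks."""
    selected, used = [], 0
    for score, text in sorted(scored_chunks, key=lambda c: c[0], reverse=True):
        cost = len(text.split())  # rough estimate: one token per word
        if any(jaccard(text, kept) >= dup_threshold for kept in selected):
            continue  # deduplication: a near-identical chunk is already included
        if used + cost > token_budget:
            continue  # does not fit; a shorter lower-ranked chunk still might
        selected.append(text)
        used += cost
    return selected


chunks = [
    (0.90, "refunds are issued within 30 days"),
    (0.85, "Refunds are issued within 30 days"),
    (0.70, "shipping takes five business days"),
    (0.20, "our office dog is named biscuit and he enjoys long walks"),
]
print(pack_context(chunks, token_budget=12))
# ['refunds are issued within 30 days', 'shipping takes five business days']
```

The `token_budget` passed in should be what remains of the model's window after subtracting the system prompt, the user query, and the space reserved for the response.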

Contextual Retrieval

Traditional chunking loses context. "The company saw 15% revenue growth" becomes unclear: which company? Which quarter? Baseline systems show a 5.7% top-20-chunk retrieval failure rate due to this context loss.

The solution: generate 50-100 token explanatory context for each chunk before embedding. The prompt: "Situate this chunk within the overall document for search retrieval purposes." This context gets prepended to the chunk before embedding and indexing.
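A minimal sketch of that preprocessing step. Only the quoted instruction sentence comes from the text above; the surrounding prompt layout, the function name, and the `generate` callable (standing in for whichever LLM client is used) are assumptions for illustration.

```python
from typing import Callable

# Assumed prompt layout; only the final instruction sentence is taken from the article.
CONTEXT_PROMPT = (
    "<document>\n{document}\n</document>\n"
    "<chunk>\n{chunk}\n</chunk>\n"
    "Situate this chunk within the overall document for search retrieval purposes."
)


def contextualize_chunks(
    document: str, chunks: list[str], generate: Callable[[str], str]
) -> list[str]:
    """Prepend an LLM-written situating context to each chunk before embedding/indexing."""
    contextualized = []
    for chunk in chunks:
        prompt = CONTEXT_PROMPT.format(document=document, chunk=chunk)
        context = generate(prompt).strip()  # expected to be roughly 50-100 tokens
        contextualized.append(f"{context}\n\n{chunk}")
    return contextualized
```

The returned strings are what get embedded and added to the BM25 index; the original chunk text can still be stored separately for display.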

Performance Results:

- Contextual embeddings alone: 35% failure reduction (5.7% → 3.7%)

- Adding contextual BM25: 49% reduction (5.7% → 2.9%)

- Adding reranking: 67% reduction (5.7% → 1.9%)

Implementation Cost: $1.02 per million document tokens, one-time. Prompt caching cuts costs by 90% for subsequent processing.

When to Implement: After validating chunking and retrieval strategies first. When context loss is a measurable issue affecting retrieval quality. When budget allows the one-time context generation cost. The performance gains justify the investment for most production systems.

Decision 6: Evaluation Framework

The Naive Approach: Manual spot-checks on 10-20 test queries. It looks good in the demo, so ship it.

Production Reality: Edge cases hide in thousands of queries. Bad answers could stem from retrieval or generation, with no way to tell which. No systematic detection catches failure modes affecting 5-10% of users. Systems showing 80% accuracy in testing drop to 60% in production.

Critical Insight: Separate Retrieval from Generation

Phase 1: Retrieval Quality Evaluation

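Metrics to track: Precision@k, Recall@k, contextual precision, contextual recall, and retrieval latency (targets are listed under Testing Framework, Phase 1).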

Test approach: Build 50-100 test queries with known relevant documents. Test at k=5, 10, and 20. Measure separately from generation.

Phase 2: Generation Quality Evaluation

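Metrics to track: groundedness, answer relevance, completeness, and format compliance (targets are listed under Testing Framework, Phase 2).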

Test approach: Same test queries with known good retrieved context. LLM-as-judge for hallucination detection. Check for contradictions versus unsupported claims.

Phase 3: End-to-End System Quality

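Metrics to track: task success rate, answer correctness, end-to-end latency, and cost per query (targets are listed under Testing Framework, Phase 3).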

Tools and Frameworks: RAGAS and DeepEval provide component-level evaluation with CI/CD integration. Evidently has 25M+ downloads and supports development plus production monitoring. TruLens offers domain-specific optimizations.

The evaluation framework catches problems before users do. It enables systematic debugging of failures (retrieval versus generation). It prevents regression in the CI/CD pipeline.

Decision 7: Fallback and Error Handling

The Naive Approach: Assume retrieval always finds relevant content. Let the LLM generate an answer from whatever got retrieved.

Production Reality: Some queries have no relevant documents (Failure Point #1). Some documents can't be chunked properly (malformed PDFs, complex tables). Empty retrievals lead to hallucinations.

The Decision Framework:

Empty Retrieval Handling: Detect when retrieval scores fall below threshold or return no results. Response: "I don't have information about that in my knowledge base." Alternative: offer to connect to a human or search broader sources.

Conflicting Information Handling: Detect when retrieved chunks contradict each other. Response: present multiple viewpoints with sources. Don't let the LLM arbitrarily choose or synthesize conflicting facts.

Parsing Failure Handling: Detect document extraction errors and malformed chunks. Response: log for manual review, exclude from retrieval. Monitor parsing failure rate by document type.

Low-Confidence Responses: Detect low retrieval scores or high generation uncertainty. Response: "I found some information but am not confident about..." Include source citations for user verification.
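The empty-retrieval and low-confidence rules can be sketched as a thin wrapper around the pipeline. The two thresholds (`min_score`, `confident_score`) are hypothetical values that would need calibrating against real retrieval scores, and `retrieve` and `generate` are placeholder callables.

```python
NO_ANSWER = "I don't have information about that in my knowledge base."
LOW_CONFIDENCE_PREFIX = "I found some information but am not confident about it: "


def answer_with_fallback(query, retrieve, generate, min_score=0.3, confident_score=0.6):
    """Refuse on empty or weak retrieval; flag answers built on mediocre evidence."""
    hits = retrieve(query)  # list of (score, chunk) pairs
    relevant = [(score, chunk) for score, chunk in hits if score >= min_score]
    if not relevant:
        return NO_ANSWER  # never call the LLM with nothing to ground it
    answer = generate(query, [chunk for _, chunk in relevant])
    if max(score for score, _ in relevant) < confident_score:
        return LOW_CONFIDENCE_PREFIX + answer
    return answer
```

Returning the refusal before the generation call is the important part: the model is never given the chance to improvise from irrelevant context.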

Hallucination Detection in Production: Real-time LLM-as-judge checking groundedness. Async batch analysis of responses. Tools like Galileo AI block hallucinations before serving. Datadog flags issues within minutes.

8 Critical Test Scenarios: Retrieval quality and relevance. Noise robustness. Negative rejection (knowing when not to answer). Information integration from multiple documents. Handling ambiguous queries. Privacy breach prevention. Illegal activity prevention. Harmful content generation prevention.

Negative rejection addresses Failure Point #1 directly: the system must know when it doesn't know.

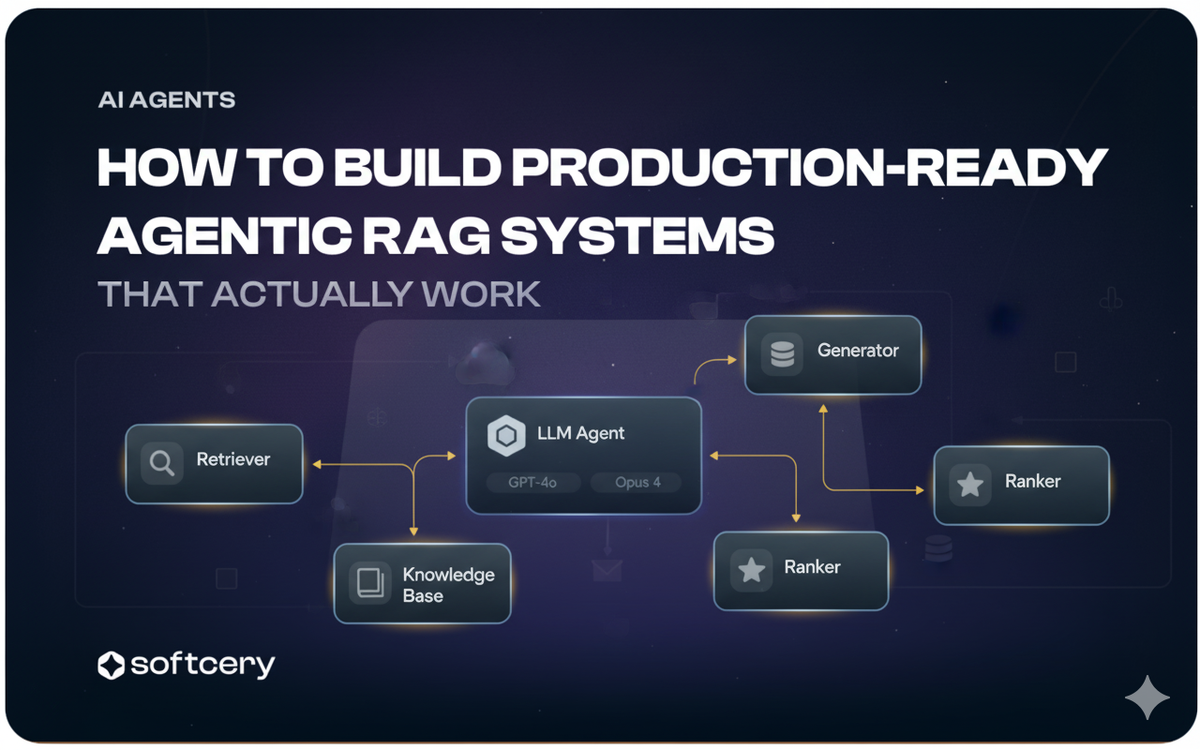

When to Consider Agentic RAG Architecture

The seven decisions above assume a traditional RAG architecture: query → retrieve → generate. But some applications need dynamic, multi-step workflows that adapt based on intermediate results.

What Agentic RAG Enables:

Autonomous AI agents manage retrieval strategies through reflection, planning, tool use, and multi-agent collaboration. The system dynamically adapts workflows to task requirements rather than following a fixed pipeline.

Single vs. Multi-Agent:

Single-agent: a router with 2+ sources decides which to query based on query classification. Multi-agent: specialized agents per domain (legal, technical, business) with a master coordinator orchestrating between them.

When to Consider:

Multiple heterogeneous data sources that require different retrieval strategies. Complex workflows requiring reasoning (e.g., "Find the contract, extract clauses, compare to policy, summarize conflicts"). Async tasks like comprehensive research, multi-document summarization, or data synthesis. Only after mastering basic RAG: agentic patterns add complexity that only pays off when traditional RAG isn't sufficient.

Frameworks:

LangChain and LangGraph for complex workflow orchestration. LlamaIndex for retrieval-focused agents. AutoGen and CrewAI for multi-agent collaboration.

Trade-offs:

Higher latency (multiple retrieval rounds). More complex debugging (agent decisions aren't always predictable). Increased cost (multiple LLM calls per query). Greater maintenance overhead.

Start with traditional RAG. Move to agentic patterns only when the application clearly requires dynamic, multi-step reasoning that can't be achieved with query transformation or structured retrieval pipelines.

Testing Framework: Validate Before Launch

Academic research states that "validation is only feasible during operation." True, but the right decisions can be validated before full production. This framework catches 80% of issues pre-launch, reducing production surprises from 60% to under 10%.

Phase 1: Retrieval Quality Testing

Test Set Construction: 50-100 queries representing diverse use cases. Include specific facts, broad concepts, and multi-part questions. Label known relevant documents for each query. Cover edge cases observed in staging or beta.

Metrics to Measure:

| Metric | Target | Interpretation |
|---|---|---|
| Precision@5 | 80% | Of top-5, 4+ are relevant |
| Precision@10 | 70% | Of top-10, 7+ are relevant |
| Recall@10 | 70% | Found 7/10 relevant docs |
| Contextual Precision | 85% | Relevant chunks ranked high |
| Contextual Recall | 75% | All needed info retrieved |
| Retrieval Latency (p95) | <500ms | Fast enough for interactive |

Testing Procedure: Run retrieval only, no generation. Measure each metric across the test set. Identify failure patterns: what types of queries fail? Adjust chunking, embeddings, retrieval strategy, or k value. Re-test until targets are met.

Common Failures and Fixes: Low precision → improve embeddings or add reranking. Low recall → increase k, improve chunking, implement hybrid search. High latency → optimize vector DB, reduce k, add caching.
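Precision@k and Recall@k are simple to compute once each test query has labeled relevant documents. A minimal sketch, with hypothetical function names and document IDs:

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved documents that are relevant."""
    if k <= 0:
        return 0.0
    return sum(doc in relevant for doc in retrieved[:k]) / k


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top-k."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_metric(metric, test_set, k: int) -> float:
    """Average a metric over (retrieved, relevant) pairs, one pair per test query."""
    return sum(metric(retrieved, relevant, k) for retrieved, relevant in test_set) / len(test_set)


retrieved = ["d1", "d7", "d3", "d9", "d4"]
relevant = {"d1", "d3", "d4", "d8"}
print(precision_at_k(retrieved, relevant, 5))  # 0.6  (3 of the 5 returned are relevant)
print(recall_at_k(retrieved, relevant, 5))     # 0.75 (3 of the 4 relevant were found)
```

Running `mean_metric` at k=5, 10, and 20 over the full test set gives the numbers to compare against the targets in the table above.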

Phase 2: Generation Quality Testing

Test Approach: Use the same test queries with known good retrieved context. This isolates generation from retrieval issues. Synthetic good context enables controlled testing.

Metrics to Measure:

| Metric | Target | Detection Method |
|---|---|---|
| Groundedness | 90% | LLM-as-judge checking context support |
| Answer Relevance | 85% | Does it address the question? |
| Completeness | 80% | All context info included? |
| Format Compliance | 95% | Follows output instructions |

LLM-as-Judge Prompt Template:

Given context: [retrieved chunks]

Question: [user query]

Answer: [generated response]

Does the answer:

1. Make claims supported by context? (Y/N + explanation)

2. Include unsupported information? (Y/N + what)

3. Miss relevant context information? (Y/N + what)

Hallucination Detection: Contradictions: claims directly against context. Unsupported: claims not in context. Both count as failures but require different fixes.
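The judge template above can be turned into a small helper that builds the prompt and parses the reply. This sketch assumes the judge model answers with one line per question in the form `1. Y ...`, `2. N ...`, `3. N ...`; that reply format, the chunk separator, and the function names are assumptions, and a real judge may need stricter output instructions.

```python
import re

JUDGE_TEMPLATE = """Given context: {context}

Question: {question}

Answer: {answer}

Does the answer:
1. Make claims supported by context? (Y/N + explanation)
2. Include unsupported information? (Y/N + what)
3. Miss relevant context information? (Y/N + what)"""


def build_judge_prompt(chunks: list[str], question: str, answer: str) -> str:
    """Fill the template, separating retrieved chunks with a visible divider."""
    return JUDGE_TEMPLATE.format(
        context="\n---\n".join(chunks), question=question, answer=answer
    )


def parse_verdicts(reply: str) -> dict[str, bool]:
    """Extract the Y/N verdicts from lines shaped like '1. Y ...'; missing lines read as False."""
    found = dict(re.findall(r"^\s*([123])\.\s*([YN])\b", reply, flags=re.MULTILINE))
    return {
        "supported": found.get("1") == "Y",
        "unsupported_info": found.get("2") == "Y",
        "missed_info": found.get("3") == "Y",
    }


def is_grounded(verdicts: dict[str, bool]) -> bool:
    """Grounded means claims are supported and nothing unsupported was added."""
    return verdicts["supported"] and not verdicts["unsupported_info"]
```

Keeping `missed_info` separate from groundedness matters because an incomplete answer and a hallucinated one call for different fixes.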

Testing Procedure: Generate answers for the test set. Run LLM-as-judge evaluation. Identify hallucination patterns. Adjust prompts, temperature, model, or context structure. Re-test until targets are met.

Common Failures and Fixes: Hallucinations → stronger prompts, lower temperature, better context. Incomplete → increase context chunks, improve prompt. Wrong format → structured output, examples in prompt.

Phase 3: End-to-End System Testing

Real-World Simulation: Complete RAG pipeline (retrieval plus generation). Test queries from staging or beta users. Include edge cases: ambiguous queries, no-answer scenarios, multi-intent questions.

Metrics to Measure:

| Metric | Target | How to Measure |
|---|---|---|
| Task Success Rate | 85% | Did system provide useful answer? |
| Answer Correctness | 90% | Factually accurate? |
| Latency (p95) | <2s | Total time user waits |
| Cost per query | Variable | Token usage → pricing |

A/B Testing Approach: Baseline: current implementation. Variant: with improvements. Compare success rate, latency, cost. Decision: keep improvements if ≥10% better on primary metric.

User Acceptance Testing: Deploy to a small beta group (5-10% of users). Collect explicit feedback (thumbs up/down). Monitor task completion, error rates, session abandonment. Iterate based on real user patterns.

Phase 4: Production Readiness Checklist

Before Launch, Validate:

Retrieval Quality:

- Precision@10 ≥70% on test set

- Recall@10 ≥70% on test set

- Retrieval latency p95 <500ms

- Hybrid search implemented (not pure vector)

- Contextual retrieval for chunks lacking context

Generation Quality:

- Groundedness ≥90% (no unsupported claims)

- Answer relevance ≥85%

- Hallucination detection in place

- Format compliance ≥95%

End-to-End System:

- Task success rate ≥85% on test set

- Total latency p95 <2s for interactive

- Cost per query within budget

- Fallback handling for empty retrievals

- "I don't know" responses when appropriate

Operational Readiness:

- Monitoring for retrieval quality drift

- Alerts for latency spikes (>3s p95)

- Alerts for error rate increases (>5%)

- Version control: chunks, embeddings, prompts, models

- Separate metrics: retrieval vs. generation failures

- Rollback plan if production issues arise

Continuous Improvement:

- Logging all queries + responses + scores

- Weekly review of failure patterns

- Monthly re-evaluation on updated test set

- Quarterly benchmark against BEIR/MTEB if applicable

Launch when all critical items are checked.

Common Implementation Mistakes

Even with the right decisions, execution matters. These mistakes can turn good architecture into production failure. Lessons come from real deployments: Kapa.ai's analysis of 100+ teams and the academic study of three production systems.

Mistake 1: Building RAG Without Evaluation Framework

The Problem: Ship fast, iterate based on user complaints. No way to know if improvements help or hurt. Can't reproduce issues users report.

Why It Fails: 60% accuracy in production gets discovered too late. Hours get spent debugging without systematic data. Changes break things unpredictably.

The Fix: Implement Phase 1-3 testing before launch. Log all queries, retrievals, and responses in production. Weekly evaluation on the test set catches drift.

Mistake 2: Optimizing Generation When Retrieval Is Broken

The Problem: Bad answers lead to tweaking prompts, adjusting temperature, trying different models. The root cause gets ignored: retrieval returning irrelevant chunks.

Why It Fails: Garbage in, garbage out. No prompt fixes bad retrieval. Time gets wasted on the wrong problem. Hallucinations get generated trying to answer from poor context.

The Fix: Always evaluate retrieval separately first. Fix Precision@10 <70% before touching generation. Measure groundedness to confirm issue location.

Mistake 3: One-Size-Fits-All Chunking Strategy

The Problem: Fixed 500-token chunks for all document types. Tables, code, and PDFs all get treated the same.

Why It Fails: Tables split mid-row (Failure Point #4: Not Extracted). Code fragments break semantic meaning. PDFs with mixed content chunk inconsistently.

The Fix: Document type detection. Different chunking strategies per type. Test chunking quality before embedding.

Mistake 4: No Fallback for Empty Retrieval

The Problem: Assume retrieval always finds something relevant. The LLM generates from irrelevant or empty context.

Why It Fails: Hallucinations (Failure Point #1: Missing Content). Users lose trust when clearly wrong answers get provided.

The Fix: Detect retrieval scores below threshold. "I don't have information about that" response. Don't hallucinate to avoid admitting ignorance.

Mistake 5: Assuming Vector Search Always Works

The Problem: Pure vector similarity for all queries. Semantic search solves everything, right?

Why It Fails: Exact keyword matches get missed: IDs, names, numbers. Domain-specific terms get poorly captured (Failure Point #2: Missed Top-Ranked Documents).

The Fix: Hybrid search with BM25 plus vector. Not optional for production. Test on queries with exact match requirements.

Mistake 6: Not Monitoring Retrieval Quality Drift

The Problem: Launch with 85% task success rate. Months later: 60% success rate. Nobody noticed the gradual degradation.

Why It Fails: The knowledge base grows, and relevant chunks rank lower. User query patterns evolve. Embedding drift occurs as the corpus changes. Academic research: "Robustness evolves rather than gets designed in."

The Fix: Weekly evaluation on a fixed test set. Alert if task success <80% for 3 consecutive days. Monthly review of new query patterns. Version everything, and track what changed when quality dropped.
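The consecutive-day alert rule is easy to get subtly wrong, so here is a minimal sketch. The function name is hypothetical, and it assumes one task success rate per day, ordered oldest to newest.

```python
def should_alert(daily_success_rates, threshold=0.80, consecutive_days=3):
    """True if the most recent `consecutive_days` values are all below the threshold."""
    recent = daily_success_rates[-consecutive_days:]
    # Require a full window so a brand-new deployment with 1-2 bad days does not alert.
    return len(recent) == consecutive_days and all(rate < threshold for rate in recent)


print(should_alert([0.86, 0.79, 0.78, 0.77]))  # True: three days in a row under 80%
print(should_alert([0.79, 0.78, 0.81]))        # False: the latest day recovered
```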

Production Deployment and Scaling

Testing passed. Ready to launch. Production introduces new challenges: scale, cost, latency, drift. The framework: start small, monitor intensely, scale gradually.

Infrastructure Decisions

Vector Database Selection:

| Database | Best For | Key Strength | Cost |
|---|---|---|---|
| Pinecone | Convenience > control | Managed, auto-scaling | $500+/mo at scale |
| Qdrant | Cost-conscious, mid-scale | Open-source, fast filtering | Self-host or managed |
| Weaviate | Hybrid search focus | OSS + managed, modular | Flexible pricing |
| PostgreSQL+pgvector | Existing Postgres users | Familiar SQL, no new infra | Free (open-source) |

Decision criteria: Under 10M vectors, any option works. Already using Postgres: pgvector. Budget constrained: Qdrant self-hosted. Need SLAs plus convenience: Pinecone. Hybrid search critical: Weaviate.

Framework Selection:

| Framework | Best For | Why |
|---|---|---|
| LlamaIndex | Retrieval-focused RAG | 35% accuracy boost, retrieval specialists |
| LangChain | Complex workflows | Multi-step orchestration, mature ecosystem |
| Both | Large projects | LlamaIndex for retrieval, LangChain for orchestration |

Deployment Architecture: Kubernetes for orchestration. Distributed vector DB with sharding. Global deployment if latency <200ms is required. Custom auto-scaling based on RAG-specific metrics, not just CPU.

Cost Management

Primary Cost Drivers: LLM inference (largest): output tokens run 3-5x more expensive than input tokens. Storage (sneaky): scales with corpus size. Reranking (if used): GPU compute for cross-encoders.

Optimization Strategies: Caching: 15-30% cost reduction through semantic caching for similar queries. Token management: eliminate boilerplate, trim context. Model routing: cheaper models for simple queries. Batching: process similar queries together. RAG versus non-RAG: approximately 10x cost reduction by reducing token usage.

Monitoring: Cost per query tracking. Alert if daily cost exceeds 120% of baseline. Weekly review of expensive queries.
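Cost per query follows directly from token counts and per-token prices. In this sketch the prices ($2.50 and $10.00 per million tokens) are made-up example values chosen so that output costs 4x input; substitute the current rates for whichever model is in use.

```python
def cost_per_query(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Dollar cost of one query, given prices per million input and output tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def over_budget(daily_cost, baseline_daily_cost, ratio=1.2):
    """True when daily spend exceeds 120% (by default) of the baseline."""
    return daily_cost > ratio * baseline_daily_cost


# Hypothetical prices: $2.50 per million input tokens, $10.00 per million output tokens.
cost = cost_per_query(6_000, 400, input_price_per_m=2.50, output_price_per_m=10.00)
print(f"${cost:.4f} per query")   # $0.0190 per query
print(over_budget(130.0, 100.0))  # True: 130 exceeds 120% of 100
```

In this example the 6,000 input tokens (mostly retrieved context) account for about 79% of the cost, which is why trimming context is usually the first optimization to try.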

Latency Optimization

Component Latency Breakdown: Retrieval: <500ms (p95 target). Reranking: +200-300ms if using cross-encoder. LLM inference: variable (depends on output length). Total target: <2s for interactive, <5s for async.

Optimization Techniques: Streaming: display partial response immediately (perceived latency reduction). Parallel processing: fetch from vector DB plus LLM simultaneously for multiple retrievals. Multiphase ranking: cheap first-pass, expensive reranking only on top-k. Caching: instant response for common or similar queries.

Infrastructure: Distribute globally if serving international users. Async workflows for non-urgent queries. Databricks Online Tables for low-latency lookups.

Monitoring and Continuous Improvement

Production Monitoring Setup

Essential Observability (2025 Stack):

Component-level instrumentation: retrieval latency, precision, recall per query. Generation latency, groundedness, relevance per response. End-to-end task success, total latency, cost.

Real-Time Alerts: Retrieval precision <70% for more than 10 queries per hour. Latency p95 exceeds 3s for more than 5 minutes. Error rate exceeds 5% for more than 5 minutes. Cost per query exceeds 2x baseline for more than 1 hour.

Drift Detection: Weekly: run the test set, compare to baseline. Monthly: analyze new query patterns. Quarterly: re-evaluate chunking and embedding decisions.

Tools: Arize and WhyLabs for embedding drift detection. Datadog LLM Observability for hallucination flagging within minutes. Evidently: open-source, 25M+ downloads, development plus production. Custom: log everything to a data warehouse for SQL analysis.

Continuous Improvement Loop

Weekly Review Process: Evaluate test set performance (catch drift early). Review failure patterns from production logs. Identify: are failures retrieval or generation? Update the test set with new failure cases. Implement fixes, measure improvement.

Monthly Deep Dive: Analyze new query patterns in production. Update the test set to cover new patterns. Benchmark current performance versus 30 days ago. Decide: are architectural changes needed? (re-chunk, retune, etc.)

Quarterly Strategic Review: Evaluate: is the current stack still optimal? Research: new techniques (like contextual retrieval in 2024). Benchmark: against BEIR or MTEB if applicable. Plan: major improvements for the next quarter.

Success Metrics: Task success rate trend (target: 85% sustained). P95 latency trend (target: <2s sustained). Cost per query trend (target: flat or decreasing). User satisfaction (thumbs up/down, NPS).

Next Steps and Production Readiness

What Matters for Production

The seven critical decisions: chunking strategy (semantic, hierarchical, sentence window). Embedding model (generic versus fine-tuned). Retrieval approach (hybrid search required). Reranking (when precision is critical). Context management (quality over quantity). Evaluation framework (separate retrieval from generation). Fallback handling (admit "I don't know").

Testing framework: Phase 1 retrieval quality (Precision@10 ≥70%). Phase 2 generation quality (Groundedness ≥90%). Phase 3 end-to-end (Task success ≥85%). Phase 4 production checklist (all critical items).

Advanced techniques: Contextual retrieval (35-67% improvement). Query transformation (HyDE, expansion). Agentic RAG (complex workflows).

The Complete Production Picture

RAG architecture is one piece. Production readiness also requires:

Observability: monitoring, tracing, alerting. Error handling: retries, circuit breakers, graceful degradation. Cost management: token optimization, caching strategies. Security: data privacy, PII handling, access control. Scaling: load balancing, auto-scaling, global deployment. Compliance: GDPR, SOC 2, industry-specific requirements.

Each area has similar complexity to RAG architecture. For comprehensive guidance covering all these areas, the AI Go-Live Plan provides the complete production readiness framework.

Next Action

Building a first RAG system: Start with semantic chunking, hybrid search, and contextual retrieval. Implement the evaluation framework (Phase 1-3) before launch. Use the checklist to avoid six common mistakes. Launch to 5-10% of users, monitor intensely, iterate.
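
One way to launch to 5-10% of users is deterministic hash bucketing, sketched below. The function name `in_rollout`, the `salt` value, and the use of SHA-256 are illustrative assumptions; a feature-flag service would serve the same purpose.

```python
import hashlib


def in_rollout(user_id: str, percent: float, salt: str = "rag-v1") -> bool:
    """Return True if this user falls inside the rollout percentage.

    Hashing the user id keeps the decision stable across requests, so the
    same user always sees the same system while you monitor it.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100  # e.g. percent=5 keeps buckets 0..499
```

Raising `percent` from 5 to 10 only adds users; nobody who already has the new system loses it, which keeps production metrics comparable between rollout stages.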

Fixing an existing RAG: Implement evaluation first (measure current state). Separate retrieval from generation issues. Fix retrieval before generation. Add monitoring to prevent regression.

The difference between RAG systems that work in demos and those that work in production comes down to these seven architectural decisions. Make them right, validate systematically, and monitor continuously. Production success follows.

Frequently Asked Questions

When should I use RAG instead of fine-tuning?

RAG works when answers exist in documents but change frequently. Fine-tuning works when behavioral patterns need to be learned. Most applications need RAG for knowledge retrieval plus fine-tuning for task-specific behavior. The costs differ significantly: RAG costs scale with usage (per query), while fine-tuning costs are one-time but require labeled data.

How long does implementation take?

Prototype to production: 6-12 weeks for a focused team. Weeks 1-2: chunking strategy and embedding selection. Weeks 3-4: retrieval and evaluation setup. Weeks 5-6: generation quality and context optimization. Weeks 7-8: testing framework and production hardening. Weeks 9-12: deployment, monitoring, and iteration. Teams that skip the evaluation framework add 4-8 weeks of debugging later.

Which vector database should I choose?

Under 10M vectors: any option works. Already using Postgres: start with pgvector. Budget constrained: Qdrant self-hosted. Need managed service with SLAs: Pinecone. Hybrid search critical: Weaviate. Start simple, migrate later if needed. Database choice matters less than chunking and retrieval strategy.

When should I implement reranking?

Only after fixing basic retrieval quality. Reranking improves precision when initial retrieval already achieves 70%+ recall. High-stakes applications (legal, medical, financial) justify the added latency and cost. Low-stakes applications (FAQs, internal search) often don't need it. Test with and without reranking to measure the actual improvement.

How do I know if my RAG system is production-ready?

Check the production readiness checklist: Precision@10 ≥70%, Recall@10 ≥70%, Groundedness ≥90%, Task success rate ≥85%, Latency p95 <2s, Fallback handling for empty retrievals, Monitoring for quality drift, Separate metrics for retrieval and generation. Launch to 5-10% of users first, monitor intensely, then scale gradually.
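
The numeric part of this checklist can be turned into an automated gate. The sketch below is only an illustration: the thresholds come from the checklist above, while the metric key names and the `readiness_failures` helper are assumptions. The non-numeric items (fallback handling, monitoring, separate metrics) still need a manual check.

```python
import operator

# Thresholds from the checklist above; the metric key names are illustrative.
THRESHOLDS = {
    "precision_at_10": (operator.ge, 0.70),
    "recall_at_10": (operator.ge, 0.70),
    "groundedness": (operator.ge, 0.90),
    "task_success_rate": (operator.ge, 0.85),
    "latency_p95_seconds": (operator.lt, 2.0),
}


def readiness_failures(metrics: dict[str, float]) -> list[str]:
    """Return one message per unmet or unmeasured target (empty list = pass)."""
    failures = []
    for name, (compare, target) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            # A metric nobody measured counts as a failure, not a pass.
            failures.append(f"{name}: not measured")
        elif not compare(value, target):
            failures.append(f"{name}: {value} misses target {target}")
    return failures
```

Running this in CI against the test set blocks a release whenever any target regresses.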

What's the most common mistake when building RAG systems?

Optimizing generation when retrieval is broken. Poor retrieval makes good generation impossible. Always evaluate retrieval separately first. Fix Precision@10 before touching prompts or models. The second most common mistake: no evaluation framework, leading to 60% accuracy discovered after launch instead of during testing.
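
Evaluating retrieval separately starts with computing Precision@k and Recall@k on a labeled test set. A minimal sketch follows, assuming each test query has a set of chunk ids judged relevant and that retrieved ids are unique; the function names are illustrative.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int = 10) -> float:
    """Fraction of the top-k retrieved ids that are relevant.

    Divides by the number of results actually returned (at most k); some
    definitions always divide by k instead, which penalizes short result lists.
    """
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    return hits / len(top_k)


def recall_at_k(retrieved: list[str], relevant: set[str], k: int = 10) -> float:
    """Fraction of all relevant ids that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)
```

Averaging both metrics over the test set gives the retrieval baseline to fix before touching prompts or models.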

When should I consider agentic RAG instead of traditional RAG?

Multiple heterogeneous data sources requiring different retrieval strategies justify agentic patterns. Complex workflows needing multi-step reasoning (find contract, extract clauses, compare to policy, summarize conflicts) benefit from agents. Master traditional RAG first. Agentic patterns add complexity that only pays off when traditional RAG can't handle the workflow.

How do I handle hallucinations in production?

Separate groundedness evaluation from relevance. Detect low retrieval scores and respond with "I don't have information about that" instead of generating from poor context. Implement LLM-as-judge checking for unsupported claims. Use lower temperature (0.0-0.3) for consistency. Monitor hallucination rate and alert when it exceeds thresholds. Never let the system prefer hallucination over admitting ignorance.
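
The low-score fallback can be sketched as a guard in front of the generation call. Everything here is an assumption for illustration: the `generate` callable stands in for whatever LLM call the system uses, and the `min_score` default of 0.5 is a placeholder that must be tuned to the score scale of the actual retriever or reranker.

```python
from typing import Callable

FALLBACK = "I don't have information about that."


def answer_or_fallback(
    query: str,
    scored_chunks: list[tuple[str, float]],
    generate: Callable[[str, list[str]], str],
    min_score: float = 0.5,  # placeholder; tune per retriever
) -> str:
    """Generate only from chunks that clear the relevance threshold."""
    usable = [text for text, score in scored_chunks if score >= min_score]
    if not usable:
        # Nothing relevant enough: admit ignorance instead of guessing.
        return FALLBACK
    return generate(query, usable)
```

Logging how often the fallback fires also gives a direct signal for the missing-content failure mode.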

Can I migrate from one vector database to another later?

Yes, but plan for re-embedding costs and downtime. Most migrations take 1-4 weeks depending on corpus size. Store raw documents separately from embeddings to enable re-indexing. Use OpenTelemetry-compatible tools to avoid vendor lock-in at the observability layer. Test the new database with production queries before the full migration. Run both systems in parallel during the transition.
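
While both systems run in parallel, one simple check is how much their top-k results overlap for the same production queries. The sketch below assumes both databases index the same chunks under the same ids; the helper names and the 0.8 overlap threshold are illustrative.

```python
def overlap_at_k(old_ids: list[str], new_ids: list[str], k: int = 10) -> float:
    """Share of the old system's top-k ids that the new system also returns."""
    old_top, new_top = set(old_ids[:k]), set(new_ids[:k])
    if not old_top:
        # Both empty counts as agreement; only the new one returning results does not.
        return 1.0 if not new_top else 0.0
    return len(old_top & new_top) / len(old_top)


def flag_divergent_queries(
    results: dict[str, tuple[list[str], list[str]]],
    k: int = 10,
    min_overlap: float = 0.8,  # illustrative threshold
) -> list[str]:
    """Return queries whose old and new results differ enough to review by hand."""
    return [
        query
        for query, (old_ids, new_ids) in results.items()
        if overlap_at_k(old_ids, new_ids, k) < min_overlap
    ]
```

Low overlap is not automatically a regression, since the new index may retrieve better chunks, so flagged queries should still be scored against the labeled test set.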

Related guides:

- You Can't Fix What You Can't See: Production AI Agent Observability Guide – Set up monitoring and tracing for RAG systems

- 5 Biggest AI Agent Fails and How to Avoid Them – Common production pitfalls and prevention strategies

- AI Agent LLM Selection – Choose the right model for RAG generation

- Why Voice Agents Sound Great in Demos but Fail in Production – Demo-to-production gap analysis for AI systems
